Future AirPods could get lasers to read the wearer's lips & process requests

Apple is researching how AirPods could use sensors like the ones in Face ID to read the wearer's lips and work out what they want, even when there is no spoken request.

Apple's patent covers both over-the-ear AirPods Max (left) and in-ear AirPods Pro -- image credit: Apple

Apple has been looking into the idea of AirPods detecting gestures for more than five years, including how such detectors could learn a user's unique movements. The first significant result of this research arrived in 2024, when AirPods Pro gained the ability to detect when a user nods or shakes their head.

Now, in a newly granted patent, Apple has revealed that it aims to go further. The "Wearable skin vibration or silent skin gesture detector" patent proposes using what it calls self-mixing interferometry to recognize more nuanced gestures.

Beyond full head movements like a nod or shake, much smaller movements, such as a smile or a whispered command, could be detected. The interferometry sensor could spot and interpret deformations in the skin, as well as skin and muscle vibrations.

Apple takes its usual patent approach of establishing precedent for the technology in everything from AirPods to eyeglasses. In each case, though, the idea is that as a user speaks, the movement of the jaw and cheeks can be detected.

That movement is detected using a Vertical-Cavity Surface-Emitting Laser (VCSEL). The VCSEL emitter and a sensor, similar to the combination used in Face ID, could be built into the frame of the device. Users could then choose how the AirPods react to different skin and lip movements picked up by that emitter and sensor.

For AirPods that sit inside the ear, rather than over-the-ear models such as AirPods Max, Apple also says that "the self-mixing interferometry sensor may direct the beam of light toward a location in an ear of the user."
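Self-mixing interferometry works because light reflected back into the laser cavity interferes with the light being generated, so the laser's output power rises and falls as the reflecting surface moves. Under the common weak-feedback approximation, each full rise-and-fall (a "fringe") corresponds to a movement of half a wavelength along the beam, since the reflected light covers the extra distance twice. The Python sketch below counts fringes in a sampled signal to estimate how far the skin moved. It is not Apple's method: the cosine signal model, the assumed 940 nm near-infrared wavelength and the function names are all illustrative assumptions.

```python
import math


def count_fringes(signal: list[float]) -> int:
    """Count interference fringes in a sampled self-mixing signal.

    Assumes a weak-feedback (roughly cosine-shaped) signal, where one full
    fringe equals one period, i.e. two sign changes of the mean-removed signal.
    """
    if len(signal) < 2:
        return 0
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    crossings = 0
    for prev, curr in zip(centered, centered[1:]):
        # A sign change between consecutive samples is one zero crossing.
        if (prev < 0 <= curr) or (prev >= 0 > curr):
            crossings += 1
    return crossings // 2


def displacement_nm(signal: list[float], wavelength_nm: float = 940.0) -> float:
    """Estimate the magnitude of movement along the beam.

    Each fringe corresponds to half a wavelength of movement, because the
    reflected light travels the extra distance twice (out and back).
    The 940 nm default is an assumed VCSEL wavelength, not Apple's spec.
    """
    return count_fringes(signal) * wavelength_nm / 2.0


if __name__ == "__main__":
    wavelength = 940.0
    # Simulate skin moving 1,410 nm (three half-wavelengths) at constant speed.
    distances = [1410.0 * k / 1000 for k in range(1000)]
    # Round-trip phase is 4*pi*d/wavelength; output power follows its cosine.
    signal = [math.cos(4 * math.pi * d / wavelength) for d in distances]
    print(count_fringes(signal), "fringes ->", displacement_nm(signal, wavelength), "nm")
```

Fringe counting only recovers how much the surface moved, not which way; real sensors typically infer direction from the asymmetric, sawtooth-like fringe shape that appears under stronger feedback.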

Changes in the light reflected back to the sensor indicate head, skin, or muscle movement. The patent itself is specifically about methods for detecting such movement, but beyond the specifics, there are two clear benefits.

The first is that movement detection allows for what Apple calls silent commands. AirPods Pro already let users silently nod or shake their head to accept or decline phone calls, and they could similarly be set to interpret a mouth movement as meaning "skip track."

Apple says this is specifically meant for situations where a user cannot give a command to the wearable device without being overheard. Detecting skin or head vibrations can also be useful when the user is able to speak, however, because that movement can serve as a form of authentication.

Right now, headphones and devices like the Apple Watch respond to a user's voice or to the press of a physical or virtual button. Both approaches have limitations.

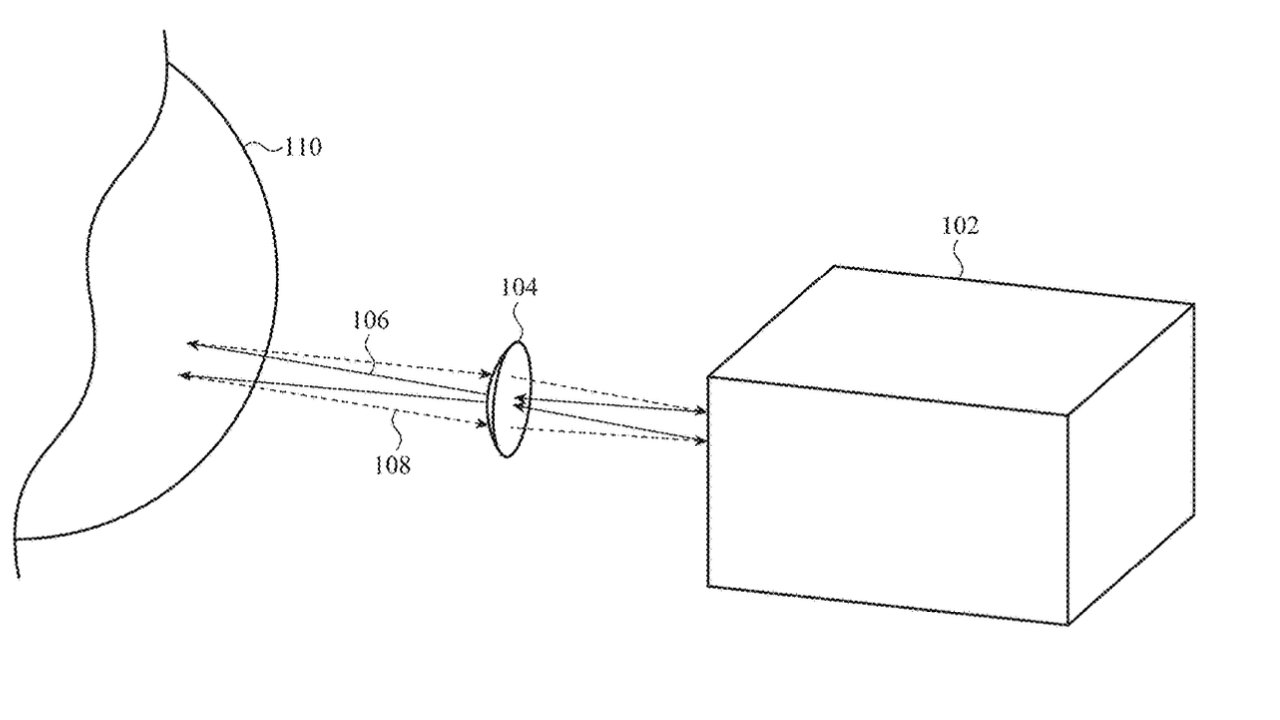

Detail from the patent showing how light from a sensor could detect ear and skin movement -- image credit: Apple

Voice recognition first has to confirm that it is the AirPods owner who is speaking, while on-screen or physical buttons require the user to have a hand free to press them.

Under this proposed technology, if an AirPods wearer calls for Siri out loud, the AirPods could check for the matching skin movement. At its most basic, if no such skin vibration is detected when the audible command is received, the AirPods can conclude that the command was not spoken by the wearer.

Consequently, whether a user calls out a command, whispers it, or even just mouths it, AirPods could respond.
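The basic check described above amounts to requiring that skin vibration coincide with the audible command. The article does not say how the patent implements this, so the Python sketch below is only an illustration. It assumes the microphone and the optical sensor each report time intervals of activity, and the `Interval` type, the function names and the 50% overlap threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """A span of time in seconds (hypothetical sensor output format)."""
    start: float
    end: float


def merge(intervals: list[Interval]) -> list[Interval]:
    """Merge overlapping intervals so no stretch of time is counted twice."""
    merged: list[Interval] = []
    for iv in sorted(intervals, key=lambda i: i.start):
        if merged and iv.start <= merged[-1].end:
            merged[-1] = Interval(merged[-1].start, max(merged[-1].end, iv.end))
        else:
            merged.append(iv)
    return merged


def overlap(a: Interval, b: Interval) -> float:
    """Length of time during which both intervals are active."""
    return max(0.0, min(a.end, b.end) - max(a.start, b.start))


def spoken_by_wearer(
    speech: Interval, vibrations: list[Interval], min_fraction: float = 0.5
) -> bool:
    """Accept a voice command only if skin vibration covers enough of it.

    `speech` is when the microphone heard the command; `vibrations` are the
    periods in which the optical sensor detected skin movement.
    The 0.5 threshold is an arbitrary assumption.
    """
    duration = speech.end - speech.start
    if duration <= 0:
        return False
    covered = sum(overlap(speech, v) for v in merge(vibrations))
    return covered / duration >= min_fraction


if __name__ == "__main__":
    command = Interval(0.0, 2.0)
    # Wearer speaking: skin vibration throughout the command.
    print(spoken_by_wearer(command, [Interval(0.1, 1.9)]))
    # Someone nearby speaking: audio heard, but no skin vibration.
    print(spoken_by_wearer(command, []))
```

Requiring a fraction of overlap, rather than any overlap at all, is one way to keep a brief, unrelated jaw movement from authorizing a command spoken by someone else.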

This newly granted patent is credited to two inventors, including Mehmet Mutlu. He is also credited on a 2021 patent application that looked at using ultrasonic sensors to authenticate user voices on Apple Watch.

Read on AppleInsider
